The finance sector is constantly evolving, and securing high-accuracy data tools for equity research in Excel is critical for maintaining the competitive edge investors need. Robust data validation strategies and sound data integration practices directly influence confidence in decision-making. As we explore these tools, we’ll uncover how financial analysts can leverage Excel’s data manipulation and visualization capabilities to achieve high accuracy in equity research.

We highlight the importance of data quality, which serves as the backbone of reliable financial models. Excel offers a platform not only for data analysis but also for creating impactful reports and visualizations. By adopting a data-driven culture, research teams can ensure that analyses yield insightful results, ultimately leading to better investment decisions.

This exploration also underscores the necessity of meticulous data governance. As we delve deeper, we will walk through the validation, integration, and governance methods that strengthen data quality, and show how to make the most of what Excel offers in this domain.

Key Takeaways

- High data quality is essential for reliable equity research.

- Excel offers powerful tools for data analysis and visualization.

- Data integration and governance are vital for effective financial modeling.

Understanding Data Quality in Equity Research

Data quality is critical in equity research. High-quality data ensures that our analyses are accurate, reliable, and relevant, which translates into better decision-making and greater trust in the outcomes of our research. According to Gartner, poor data quality costs organizations an average of $12.9 million per year.

Data management plays a key role in maintaining quality. We need to implement robust processes to ensure data integrity and consistency. By organizing and validating data systematically, we can minimize errors and achieve uniform results across our research endeavors.

To assess data quality, we should focus on several metrics:

- Accuracy: The degree to which data reflects the real-world values or outcomes it’s supposed to represent. For equity research, even small inaccuracies can lead to significant financial miscalculations.

- Completeness: Evaluates whether all necessary data points are included, leaving no gaps that could alter the research results.

- Consistency: Ensures that data is uniform across different datasets or time periods. Inconsistent data can create confusion and mislead analyses.

- Timeliness: Measures how up-to-date the data is. For equity analysts, having the most current data is crucial for making informed investment decisions.

- Validity: Ensures that data is formatted correctly and follows the rules or standards for the given context. In equity research, invalid data can result in errors when running models or performing analyses.

- Uniqueness: Ensures that there are no duplicate entries within the dataset. In equity research, duplicate data can lead to double counting or skewed results, affecting the accuracy of financial models and insights.

- Reliability: The extent to which data can be trusted over time. Reliable data sources reduce the risk of making erroneous conclusions.
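To make these metrics concrete, the Python sketch below scores a small, hypothetical table of revenue figures on four of them. The record layout, the rule that revenue must be non-negative, and the 90-day freshness window are illustrative assumptions rather than industry standards. Accuracy and reliability are left out because they require comparison against an external source or a track record over time.

```python
from datetime import date
from typing import NamedTuple, Optional

class Record(NamedTuple):
    ticker: str
    period: str
    revenue: Optional[float]  # in $ millions; None means the value is missing
    as_of: date               # date the figure was last refreshed

# Hypothetical sample data for illustration only.
records = [
    Record("ACME", "2023Q4", 512.0, date(2024, 2, 15)),
    Record("ACME", "2023Q4", 512.0, date(2024, 2, 15)),   # duplicate entry
    Record("BETA", "2023Q4", 230.5, date(2024, 2, 20)),
    Record("GAMMA", "2023Q4", -40.0, date(2023, 6, 30)),  # invalid and stale
    Record("DELTA", "2023Q4", None, date(2024, 2, 28)),   # missing revenue
]

def completeness(rows):
    """Share of rows with a populated revenue figure."""
    return sum(r.revenue is not None for r in rows) / len(rows)

def uniqueness(rows):
    """Distinct (ticker, period) keys divided by total rows; 1.0 means no duplicates."""
    return len({(r.ticker, r.period) for r in rows}) / len(rows)

def validity(rows):
    """Share of populated revenue figures that satisfy the assumed rule 'revenue >= 0'."""
    populated = [r.revenue for r in rows if r.revenue is not None]
    return sum(v >= 0 for v in populated) / len(populated)

def timeliness(rows, today, max_age_days=90):
    """Share of rows refreshed within max_age_days of `today`."""
    return sum((today - r.as_of).days <= max_age_days for r in rows) / len(rows)

report = {
    "completeness": completeness(records),
    "uniqueness": uniqueness(records),
    "validity": validity(records),
    "timeliness": timeliness(records, today=date(2024, 3, 1)),
}
print(report)
```

For these sample rows, completeness, uniqueness, and timeliness each come out at 0.8 and validity at 0.75, pointing directly at the missing figure, the duplicate, and the stale negative entry.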

Frameworks for assessing data quality provide structured approaches to evaluate and improve the reliability of datasets. These frameworks often incorporate a set of predefined metrics, such as accuracy, completeness, consistency, and timeliness, to systematically measure data quality. One common approach is the Total Data Quality Management (TDQM) framework, which focuses on continuous monitoring and improvement of data.

By following these frameworks, analysts can ensure that their data meets high standards, enabling more accurate and trustworthy analysis in fields like equity research.

Excel for Data Analysis and Manipulation

We can use Excel’s tools for data analysis and manipulation to strengthen our equity research. Features ranging from basic sorting and filtering to advanced functionality like Power Query and pivot tables help us streamline data workflows and improve accuracy.

Leveraging Built-In Excel Features

Excel offers a range of built-in tools for effective data analysis. Filtering and sorting functions help us handle large datasets by focusing on relevant information. Conditional formatting enables easy identification of data trends, making discrepancies more apparent.

Using Excel’s data validation options ensures data integrity by restricting the type of data that can be entered. We often use these features to maintain clean and accurate data, which is essential for producing reliable equity research reports.

Data Manipulation Using Pivot Tables

Pivot tables are a powerful tool for data manipulation. They allow us to quickly summarize and analyze complex datasets by creating custom reports. By grouping and rearranging data, we can derive insights that are otherwise hidden in raw data.

Creating calculated fields in pivot tables helps us perform additional calculations without altering the original dataset. This means we can focus on analyzing patterns and making informed decisions based on comprehensive data views. We find the flexibility of pivot tables indispensable for efficient data transformation.
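One detail worth knowing: Excel evaluates a calculated field’s formula on the summed values of the fields it references, not row by row. The Python sketch below mimics that behavior for a hypothetical operating-margin field (operating income divided by revenue) grouped by sector; the sectors, tickers, and figures are made up for illustration.

```python
from collections import defaultdict

# Hypothetical line items: (sector, ticker, revenue, operating_income), in $ millions.
rows = [
    ("Tech", "ACME", 500.0, 100.0),
    ("Tech", "BETA", 300.0, 30.0),
    ("Energy", "GAMMA", 800.0, 120.0),
]

def pivot_with_margin(data):
    """Sum revenue and operating income by sector, then derive margin from the sums.

    This mirrors how a PivotTable calculated field such as
    =OperatingIncome/Revenue is evaluated on summed fields, not averaged per row.
    """
    totals = defaultdict(lambda: [0.0, 0.0])  # sector -> [revenue, operating income]
    for sector, _ticker, revenue, op_income in data:
        totals[sector][0] += revenue
        totals[sector][1] += op_income
    return {
        sector: {"revenue": rev, "operating_income": oi, "op_margin": oi / rev}
        for sector, (rev, oi) in totals.items()
    }

summary = pivot_with_margin(rows)
for sector, values in summary.items():
    print(sector, values)
```

For the Tech group this yields 130 / 800 = 16.25%, whereas averaging the two row-level margins (20% and 10%) would give 15%. Knowing which of the two a pivot table reports avoids a subtle source of error.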

Advanced Techniques with Power Query

Power Query is Excel’s advanced tool for data transformation and automation. It lets us connect to a wide variety of data sources and perform extensive transformations. By using Power Query, we can automate repetitive tasks such as data cleaning and merging datasets, saving time and reducing errors.

Power Query’s graphical interface makes it easier to perform complex transformations without advanced coding skills. This is especially useful for integrating disparate data sources into a single, consistent dataset, improving both the quality and the speed of our analysis.
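Power Query records transformations as a sequence of applied steps written in its own M language. The sketch below is not M code; it is a Python illustration, under assumed field names, of the kind of step sequence such a query automates: trimming and upper-casing ticker keys from two hypothetical sources, then performing a left outer merge (comparable to a Merge Queries step with a left outer join) and listing rows that found no match.

```python
# Hypothetical source 1: fundamentals exported from one system.
fundamentals = [
    {"ticker": " acme ", "period": "2023Q4", "eps": 2.10},
    {"ticker": "BETA", "period": "2023Q4", "eps": 0.85},
    {"ticker": "GAMMA", "period": "2023Q4", "eps": 1.40},
]
# Hypothetical source 2: closing prices from another system, with inconsistent key casing.
prices = [
    {"ticker": "ACME", "close": 42.0},
    {"ticker": "beta", "close": 17.0},
]

def normalize_ticker(value):
    """Trim surrounding whitespace and upper-case the ticker so keys from both sources match."""
    return value.strip().upper()

def left_merge(left, right, key="ticker"):
    """Left outer join: keep every left row, attach the price when the key matches, record misses."""
    lookup = {normalize_ticker(r[key]): r for r in right}
    merged, unmatched = [], []
    for row in left:
        k = normalize_ticker(row[key])
        match = lookup.get(k)
        merged.append({**row, key: k, "close": match["close"] if match else None})
        if match is None:
            unmatched.append(k)
    return merged, unmatched

merged, unmatched = left_merge(fundamentals, prices)
for row in merged:
    # Price-to-earnings is only computable where the merge found a price.
    pe = row["close"] / row["eps"] if row["close"] is not None else None
    print(row["ticker"], row["period"], pe)
print("unmatched:", unmatched)
```

Surfacing unmatched keys, rather than silently dropping them, is what keeps a merge from quietly shrinking the dataset.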

Conditional Formatting to Maintain Data Accuracy

We rely on conditional formatting to highlight specific data points based on predefined criteria. By using this feature, we can ensure that errors and anomalies are quickly identified and corrected. This proactive measure maintains data accuracy, which is crucial for reliable analysis.

For equity research, conditional formatting can signal trends by visually emphasizing key data changes. This quick visual inspection aids in swift decision-making processes, ensuring our reports are both timely and accurate.
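A formula-based conditional formatting rule is evaluated cell by cell. For example, a rule such as =ABS(C2/B2-1)>0.2 applied to a row of quarterly figures would highlight any quarter that moved more than 20% from the previous one. The Python sketch below applies the same test to a hypothetical revenue series; the 20% threshold is an illustrative assumption.

```python
# Hypothetical quarterly revenue series ($ millions), oldest first.
quarters = ["2023Q1", "2023Q2", "2023Q3", "2023Q4"]
revenue = [100.0, 104.0, 131.0, 128.0]

def flag_large_changes(labels, values, threshold=0.20):
    """Return (label, pct_change) for periods whose change vs. the prior period exceeds threshold."""
    flagged = []
    for i in range(1, len(values)):
        prior, current = values[i - 1], values[i]
        if prior == 0:
            continue  # percentage change is undefined; such cells need manual review
        change = current / prior - 1
        if abs(change) > threshold:
            flagged.append((labels[i], round(change, 4)))
    return flagged

print(flag_large_changes(quarters, revenue))
```

Here only 2023Q3 is flagged, with a change of roughly 26%.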

Custom Formulas and Add-ins

Custom formulas increase our analytical capabilities beyond Excel’s standard functions. By creating specific formulas tailored to our data needs, we can carry out precise calculations essential for detailed equity analysis.
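As an example of a tailored formula, the compound annual growth rate (CAGR) is computed as (ending value / starting value)^(1 / years) - 1. In a worksheet this might be written as =(C2/B2)^(1/D2)-1, assuming a hypothetical layout with the starting value in B2, the ending value in C2, and the number of years in D2; Excel’s built-in RRI function returns the same rate. A Python version with basic input checks:

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or end_value <= 0:
        raise ValueError("CAGR is undefined for non-positive start or end values")
    if years <= 0:
        raise ValueError("years must be positive")
    return (end_value / start_value) ** (1 / years) - 1

# Revenue growing from 100 to 121 over two years implies 10% annual growth.
print(round(cagr(100.0, 121.0, 2), 6))  # 0.1
```

Rejecting non-positive inputs up front avoids the misleading or complex-valued results the raw formula would otherwise produce.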

Excel add-ins, such as those for statistical analysis or financial modeling, expand the software’s capabilities, enabling us to perform more complex analyses without switching to other programs. By integrating these tools, we enhance the robustness and accuracy of our data reporting processes.

Effective Data Validation Strategies

Ensuring data reliability and validity helps us achieve high-accuracy equity research in Excel. We will explore how establishing validation rules and employing automated data cleansing processes can enhance data quality.

Establishing Validation Rules

The first step in maintaining data accuracy is setting up robust validation rules. These rules ensure that data entered into Excel meets specific criteria. With Excel’s built-in data validation tools, we can restrict inputs to a valid range, enforce consistent data types, or limit entries to a predefined list. Cross-field validation rules go a step further by checking consistency between related data points. For instance, a fiscal period’s end date must fall after its start date, and a segment’s revenue should not exceed the company’s total revenue.

Using clear error messages is equally important. When users attempt to enter incorrect data, informative prompts guide them to correct entries. This approach minimizes data entry errors and supports our goal of achieving reliable data for equity research. Validation rules act as a frontline defense against poor-quality data by systematically preventing invalid entries.

Automated validation, whether through programming in Excel or through integration with tools like R, significantly improves data quality checks. With Excel’s VBA, users can create macros that automate repetitive validation tasks, such as checking for missing values or ensuring data consistency across sheets. For more complex statistical validation, Excel can be connected to R, whose statistical libraries support advanced checks like outlier detection, regression diagnostics, or data distribution analysis. This integration streamlines the process, helping equity analysts ensure their data is not only accurate but also statistically sound.
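R is one option for statistical checks; as a stand-in, the sketch below uses plain Python to run two of the checks described: a missing-value scan like one a VBA macro might perform, and outlier detection using the modified z-score. The 0.6745 scaling constant and 3.5 cutoff follow the rule of thumb proposed by Iglewicz and Hoaglin; the return series and its data-entry error are invented for illustration.

```python
from statistics import median

def find_missing(values):
    """Indices of missing entries (None)."""
    return [i for i, v in enumerate(values) if v is None]

def modified_z_outliers(values, threshold=3.5):
    """Flag indices whose modified z-score exceeds threshold, ignoring missing entries.

    Modified z-score = 0.6745 * (x - median) / MAD, where MAD is the median
    absolute deviation from the median.
    """
    present = [(i, v) for i, v in enumerate(values) if v is not None]
    med = median(v for _, v in present)
    mad = median(abs(v - med) for _, v in present)
    if mad == 0:
        return []  # no spread to measure against; inspect manually
    return [i for i, v in present if abs(0.6745 * (v - med) / mad) > threshold]

# Hypothetical daily returns (%) with one probable data-entry error (12.0 instead of 1.2).
returns = [0.4, -0.3, 0.8, None, 1.2, -0.6, 12.0, 0.1]
print("missing:", find_missing(returns))
print("outliers:", modified_z_outliers(returns))
```

A plain z-score based on the mean and standard deviation is a common alternative, but a single large error inflates the standard deviation enough to partly hide itself: with this sample, the 12.0 entry scores only roughly 2.2 on that measure, below the common cutoff of 3. The median-based version flags it clearly.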

Automated Data Cleansing Processes

After establishing validation rules, the next step is cleansing existing data to correct any inconsistencies. Automated data cleansing brings existing data into line with the defined rules and removes errors that may have slipped through. Tools like VBA, PowerApps, and Power Query can automate repetitive cleaning tasks, improve efficiency, and enhance data quality.

Automation tools facilitate specific tasks such as removing duplicates, correcting data formats, and ensuring accuracy in large datasets. By automating, we reduce human error and free up resources for more critical analysis tasks. Through data cleansing, we ensure that the foundation of our equity research remains strong and accurate. This step is vital for maintaining integrity and reliability in our datasets.
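A minimal Python sketch of these cleansing steps, assuming a hypothetical export in which tickers have inconsistent case and spacing, numbers arrive as text with thousands separators or accounting-style parentheses, and the same company-period pair appears twice:

```python
# Hypothetical raw export with mixed formats and a duplicate row.
raw_rows = [
    {"ticker": "acme ", "period": "2023Q4", "net_income": "1,234.5"},
    {"ticker": "ACME", "period": "2023Q4", "net_income": "1234.5"},  # duplicate after cleaning
    {"ticker": "BETA", "period": "2023Q4", "net_income": "(56.0)"},  # accounting-style negative
    {"ticker": "GAMMA", "period": "2023Q4", "net_income": "n/a"},    # unparseable placeholder
]

def parse_number(text):
    """Convert strings like '1,234.5' or '(56.0)' to floats; return None if unparseable."""
    cleaned = text.strip().replace(",", "")
    negative = cleaned.startswith("(") and cleaned.endswith(")")
    if negative:
        cleaned = cleaned[1:-1]
    try:
        value = float(cleaned)
    except ValueError:
        return None
    return -value if negative else value

def cleanse(rows):
    """Normalize tickers and numbers, then drop duplicate (ticker, period) rows, keeping the first."""
    seen, cleaned = set(), []
    for row in rows:
        ticker = row["ticker"].strip().upper()
        key = (ticker, row["period"])
        if key in seen:
            continue
        seen.add(key)
        cleaned.append({"ticker": ticker, "period": row["period"],
                        "net_income": parse_number(row["net_income"])})
    return cleaned

for row in cleanse(raw_rows):
    print(row)
```

This version keeps the first occurrence of a duplicate key. When duplicates carry conflicting values, routing them to a reviewer is usually safer than dropping one automatically.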

Best Practices for Data Integration and Governance

Ensuring that data is both relevant and well-integrated requires ongoing review and strict adherence to documentation standards.

Data Integration Practices

- Unified Data Platforms: Integrating data from various sources into one unified platform ensures consistency and coherence across datasets. By centralizing data, researchers eliminate the risk of discrepancies arising from working with fragmented or siloed information. Unified platforms also make it easier to apply standardized data validation and cleansing techniques, improving overall data quality and ensuring all team members access the same accurate, up-to-date information.

- Automated Workflows: Automated workflows are essential for reducing manual data entry errors and accelerating the data processing cycle. Setting up automated data pipelines can help quickly transform raw data into a usable format, run predefined validation checks, and generate reports.

- Scalable Solutions: As datasets grow, scalable solutions become key. Implementing scalable data integration systems, like cloud-based platforms or modular software, allows analysts to handle increasing volumes of data without performance bottlenecks. These systems should also support advanced analytical tools and accommodate additional data sources, ensuring the research framework can adapt as the scope and complexity of the project expand.
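An automated workflow of the kind described above can be reduced to three stages: transform, validate, and report, with the report produced only when every check passes. The Python sketch below wires hypothetical step functions into that sequence; in practice the stages might be Power Query steps, VBA macros, or scheduled scripts.

```python
def run_pipeline(raw_rows, transform, checks, report):
    """Transform raw rows, run every validation check, and build the report only if all pass."""
    rows = [transform(r) for r in raw_rows]
    problems = [problem for check in checks for problem in check(rows)]
    if problems:
        # Stop instead of publishing a report built on data that failed validation.
        raise ValueError("Validation failed: " + "; ".join(problems))
    return report(rows)

# Hypothetical step implementations for illustration.
def normalize(row):
    """Transform step: standardize the ticker and express revenue in $ millions."""
    return {"ticker": row["ticker"].strip().upper(), "revenue_m": row["revenue"] / 1e6}

def revenue_non_negative(rows):
    """Check: report every row with negative revenue."""
    return [f"{r['ticker']}: negative revenue" for r in rows if r["revenue_m"] < 0]

def tickers_unique(rows):
    """Check: report each ticker that appears more than once."""
    tickers = [r["ticker"] for r in rows]
    return [f"{t}: duplicate ticker" for t in sorted(set(tickers)) if tickers.count(t) > 1]

def total_revenue_report(rows):
    """Report step: a one-line summary of the validated data."""
    return {"companies": len(rows), "total_revenue_m": sum(r["revenue_m"] for r in rows)}

raw = [{"ticker": "acme", "revenue": 512e6}, {"ticker": "BETA ", "revenue": 230.5e6}]
print(run_pipeline(raw, normalize, [revenue_non_negative, tickers_unique], total_revenue_report))
# {'companies': 2, 'total_revenue_m': 742.5}
```

Failing loudly when a check trips, instead of producing a report from questionable data, is the main design choice here.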

Data Governance Principles

- Clear Policies: Establishing clear data governance policies ensures the responsible use and security of data. These policies should define roles, responsibilities, and procedures for data management, ensuring that all team members understand how to handle sensitive information and comply with regulatory requirements. Frameworks like the Data Management Body of Knowledge (DMBOK) provide a comprehensive approach to data governance, helping organizations develop tailored policies that mitigate risks related to data breaches or misuse.

- Regular Audits: Conducting regular audits helps maintain data accuracy and compliance with established governance policies. Audits should assess data quality, adherence to security protocols, and the effectiveness of data management practices. These evaluations help identify discrepancies, outdated information, or potential vulnerabilities in the data lifecycle.

- Data Stewardship: Data stewards are responsible for maintaining data quality, implementing governance policies, and serving as a point of contact for any data-related issues. They ensure that best practices are followed, facilitate training for team members on data management, and act as champions for data integrity and security.
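Part of a regular audit can be automated by reconciling model figures against their source and checking how recently each figure was refreshed. The sketch below assumes hypothetical metric names, a 0.5% relative tolerance, and a 120-day staleness limit; appropriate thresholds depend on the data and the team’s policies.

```python
from datetime import date

def audit(model_values, source_values, last_updated, today,
          tolerance=0.005, max_age_days=120):
    """Reconcile model figures with source figures; flag gaps, mismatches, and stale entries.

    model_values and source_values map metric names to numbers; last_updated maps
    metric names to the date each source figure was last refreshed. tolerance is a
    relative difference (0.005 = 0.5%). Both thresholds are illustrative defaults.
    """
    findings = []
    for metric, source in source_values.items():
        model = model_values.get(metric)
        if model is None:
            findings.append(f"{metric}: missing from model")
        elif abs(model - source) > tolerance * abs(source):
            findings.append(f"{metric}: model {model} vs source {source}")
        updated = last_updated.get(metric)
        if updated is None or (today - updated).days > max_age_days:
            findings.append(f"{metric}: not refreshed within {max_age_days} days")
    return findings

# Hypothetical figures for one company.
model = {"revenue": 512.0, "eps": 2.10, "shares": 240.0}
source = {"revenue": 512.0, "eps": 2.01, "shares": 240.0, "capex": 35.0}
last_updated = {"revenue": date(2024, 2, 15), "eps": date(2024, 2, 15),
                "shares": date(2023, 6, 30), "capex": date(2024, 2, 15)}

for finding in audit(model, source, last_updated, today=date(2024, 3, 1)):
    print(finding)
```

Each finding names the metric and the reason, which gives a data steward a concrete worklist rather than a single pass/fail result.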

Adopting a Data-Driven Culture for Research

Incorporating a data-driven culture into research practices means embedding data at the heart of decision-making processes. By doing so, we enhance our ability to make informed decisions, resulting in more robust research outcomes. This shift not only benefits the accuracy of our findings but also optimizes operational efficiency.

To establish such a culture, we must prioritize data quality monitoring. Regular checks and systematic evaluations ensure that our datasets remain accurate and reliable. Identifying and eliminating instances of bad data prevents errors from skewing research insights, saving time and resources down the line.

Building a data-driven organization involves training teams at all levels. This means equipping everyone with the skills to interpret and use data effectively, fostering an environment where data informs every stage of a project. As a result, our research becomes more credible and impactful.

Implementing user-friendly tools, such as Excel for data analysis, aids in this transition. Excel’s capacity to handle complex datasets allows us to manage information efficiently and maintain high standards of accuracy.

Advancing Equity Research Through High-Quality Data Practices

Ensuring high-quality data for equity research in Excel is not just about utilizing powerful software. It’s about embedding a culture of data integrity, governance, and continuous improvement within the research process. By using thorough data validation strategies, leveraging Excel’s advanced functionalities, and implementing comprehensive data governance principles, financial analysts can improve the quality of analyses, leading to more informed investment decisions.

Additionally, as the finance sector continues to evolve, staying ahead of the curve requires a commitment to high data standards. Investing in the right tools and frameworks will empower analysts to extract meaningful insights while maintaining the integrity and reliability of their datasets. This commitment to excellence is essential for building trust with stakeholders and driving successful investment outcomes.

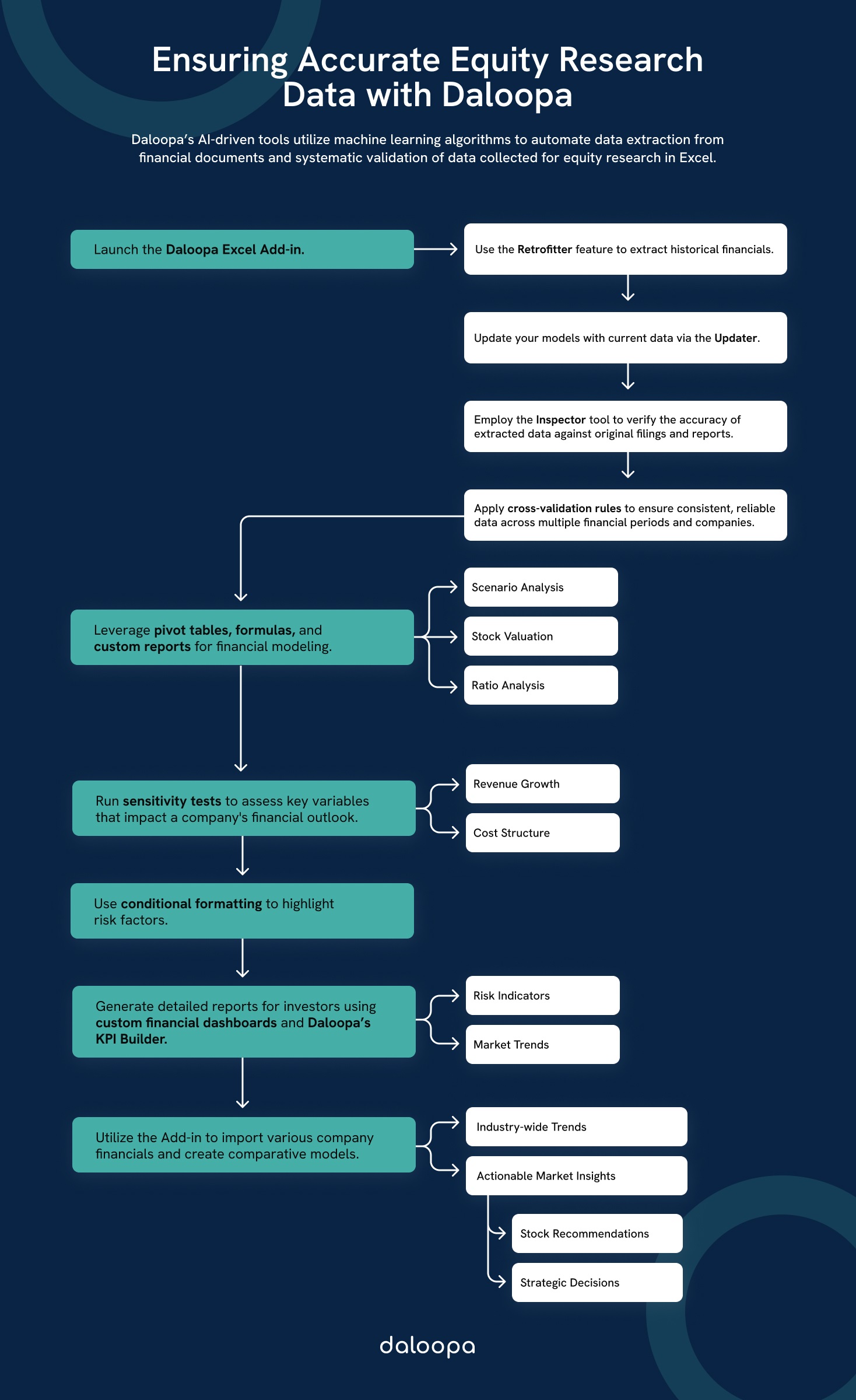

Ready to elevate your research with high-quality data? Choose a Daloopa plan today and join top analysts in enjoying fast and streamlined workflows.